Last week, a group of us from District Data Labs flew to Portland, Oregon, to attend PyCon, the largest annual gathering of the Python community. We had a talk, a tutorial, and two posters accepted to the conference, and we also hosted development sprints for several of our open source projects. In this blog post, we've gathered everything in one place to share with those who couldn't be with us at the conference.

Tutorial: Natural Language Processing with NLTK and Gensim

Ben Bengfort (with assistance from Laura Lorenz) delivered a three-hour tutorial on Natural Language Processing with NLTK and Gensim.

Abstract

In this tutorial, we will begin by exploring the features of the NLTK library. We will then focus on building a language-aware data product: a topic identification and document clustering algorithm built on a web crawl of blog sites. The clustering algorithm will start with a simple Lesk K-Means clustering and then improve on it with an LDA analysis using the popular Gensim library.
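For readers who haven't seen document clustering before, here is a rough, minimal sketch of the K-Means idea the abstract mentions. It is not the tutorial's code: it uses plain Python instead of NLTK or Gensim, leaves out the Lesk and LDA steps entirely, and assumes whitespace tokenization, raw term counts, cosine similarity, a toy four-document corpus, and seeding the centroids with the first k documents. The function names are hypothetical.

```python
import math
from collections import Counter

def vectorize(docs):
    """Represent each document as a bag-of-words term-frequency Counter."""
    return [Counter(doc.lower().split()) for doc in docs]

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def centroid(vectors):
    """Average a non-empty list of sparse vectors into one sparse vector."""
    total = Counter()
    for vec in vectors:
        total.update(vec)
    return {term: weight / len(vectors) for term, weight in total.items()}

def kmeans(docs, k, max_iterations=10):
    """Cluster documents into k groups; returns one integer label per document."""
    vectors = vectorize(docs)
    # Assumed deterministic seeding: the first k documents are the initial centroids.
    centroids = [dict(vec) for vec in vectors[:k]]
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each document joins the centroid it is most similar to.
        new_labels = [
            max(range(k), key=lambda c: cosine(vec, centroids[c]))
            for vec in vectors
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its member documents.
        for c in range(k):
            members = [vec for vec, label in zip(vectors, labels) if label == c]
            if members:
                centroids[c] = centroid(members)
    return labels

# Hypothetical toy corpus: two "programming" posts and two "soccer" posts.
docs = [
    "python code python library",
    "soccer goal match team",
    "python library install code",
    "team match soccer league",
]
labels = kmeans(docs, k=2)
print(labels)  # [0, 1, 0, 1]
```

On a real crawl of blog posts you would likely want proper tokenization, stopword removal, and TF-IDF weighting before clustering; the abstract's next stage goes further and replaces this with LDA topic modeling in Gensim.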

The Getting Started Guide for the tutorial can be found here, the slides can be found here, the IPython Notebooks can be found here, and the corpus can be downloaded from here.

Talk: Visual Diagnostics for More Informed Machine Learning

Rebecca Bilbro gave a talk about using data visualization for feature analysis, model selection and evaluation, and hyperparameter tuning to make machine learning more informed.

Abstract

Visualization has a critical role to play throughout the analytic process. Where static outputs and tabular data may render patterns opaque, human visual analysis can uncover volumes and lead to more robust programming and better data products. For Python programmers who dabble in machine learning, visual diagnostics are a must-have for effective feature analysis, model selection, and evaluation.

If you'd like to learn more after watching the talk, you can read the three-part blog post series she wrote on the topic.

- Part 1: Feature Analysis

- Part 2: Demystifying Model Selection

- Part 3: Visual Evaluation and Parameter Tuning

Posters

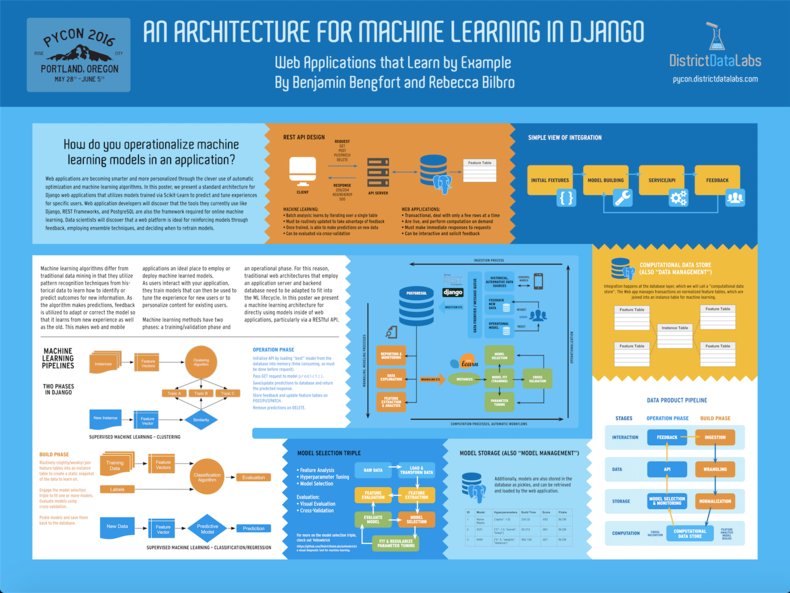

We also presented two posters: one titled "An Architecture for Machine Learning with Django" and another titled "Evolutionary Design of Particle Swarms." Clicking on a poster opens a high-resolution image of it (give it a few seconds... it's big).

Sprints

Finally, we hosted sprints for three of our open source projects.

- Baleen: An automated ingestion service for blogs to construct a corpus for NLP research.

- Trinket: A multidimensional data explorer and visualization tool.

- Yellowbrick: A visual diagnostics API for machine learning.

Each of the links above leads to the project's GitHub repository. We are always looking for additional contributors to all of these projects, so if you'd like to get involved, please reach out!

Conclusion

Well, there you have it: a complete list of all the materials we presented at PyCon. I want to thank all the folks who worked so hard to make PyCon a success for DDL, as well as everyone who came to learn at our tutorial, listen to our talk, discuss our posters, and code alongside us at the sprints. Thank you, and we hope to see you again next year!

District Data Labs provides data science consulting and corporate training services. We work with companies and teams of all sizes, helping them make their operations more data-driven and enhancing the analytical abilities of their employees. Interested in working with us? Let us know!

Tony Ojeda - Founder of District Data Labs